Original: https://aprenent.substack.com/p/mhe-flipat-amb-replit-2-de-7

This is the continuation of the post: https://thelearningrub.substack.com/p/im-crazy-about-replit-1-of-7

The idea

To put it simply: I have a history with Pokémon, and I recently got hooked on the mobile trading card game. And what happens when you are a developer, and a former mobile app developer at that? You are never satisfied with other people's apps. You always find some little thing that is missing and tell yourself, “Wow! That wouldn't be so hard to build!” ERROR. As the meme says: “Developers do things because we think they are easy to do” (and then we discover all the side effects and how much work it really is, but by then honor won't let us back down).

And what was I missing? Well, it turns out that the cards belong to a series: “Pikachu”, “Charizard” or “Mewtwo”, and each pack you open is of one of these types. Sure, when you have few cards it doesn't matter which type you open, but once you start accumulating them you ask yourself: which pack should I open? Which series am I missing the most cards from, and so gives me the best chance of getting new ones? Surprisingly, the game doesn't give you this information. And there we have it: that's how the motivation was born to build a “something” where I could keep track of how many cards of each series I'm missing. “Make an Excel sheet and don't complicate your life!” you'll say. But if you're a developer, you know perfectly well that Excel is one of our kryptonites.
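To make the idea concrete, here is a minimal sketch of the tally I had in mind. It is not the code of the final app: the card dictionaries, the `id` and `series` keys, and both function names are assumptions made up for this illustration, and "most missing cards" is only my rough heuristic for "best chance of something new".

```python
from collections import Counter


def missing_per_series(all_cards, owned_ids):
    """Count, for each series, how many cards are not in the collection yet.

    all_cards: iterable of dicts with hypothetical keys "id" and "series".
    owned_ids: set of ids of the cards already owned.
    """
    missing = Counter()
    for card in all_cards:
        if card["id"] not in owned_ids:
            missing[card["series"]] += 1
    return missing


def best_series_to_open(all_cards, owned_ids):
    """Return the series with the most missing cards, or None if complete."""
    missing = missing_per_series(all_cards, owned_ids)
    return missing.most_common(1)[0][0] if missing else None


if __name__ == "__main__":
    # Placeholder data, not real card numbers.
    cards = [
        {"id": 1, "series": "Pikachu"},
        {"id": 2, "series": "Pikachu"},
        {"id": 3, "series": "Charizard"},
        {"id": 4, "series": "Mewtwo"},
    ]
    print(best_series_to_open(cards, owned_ids={3}))
```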

I was about to have a quiet chat with ChattyGPT when I came across Mr. Amjad, whom I already talked about, so off to Replit, head first!

First challenge: getting the data

Pokémon information on the internet? Impossible not to find it! I use DuckDuckGo; otherwise I would have gone straight for the “I'm Feeling Lucky” button. Wikidex had everything I needed. Let's play.

To my surprise, Wikidex lets you open the wiki editor without even being logged in (I guess it will have something to say when you actually try to save). So I spared myself the hassle of web scraping.

Let's play with Replit!

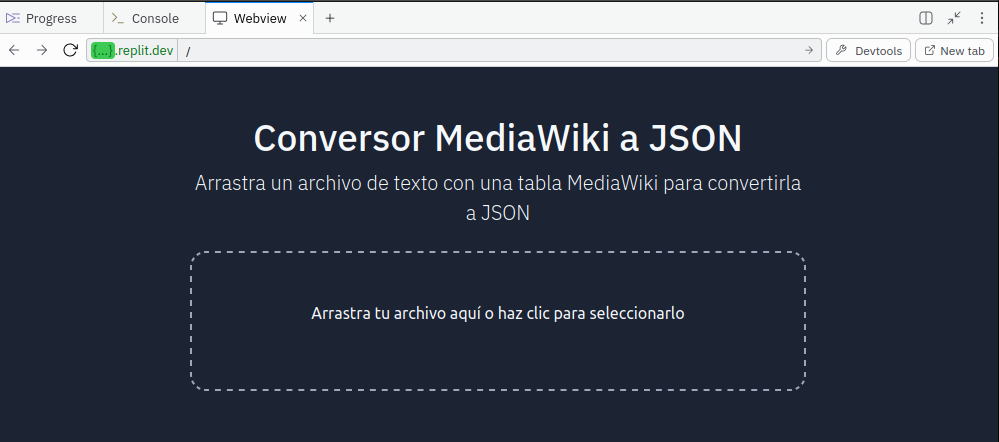

From Wikidex I could copy a MediaWiki table with all the data I needed, and more. But this format is not suitable for programmatic use, so I needed a MediaWiki-table-to-JSON converter. That is unusual enough that I wondered whether a tool already exists somewhere, but that would take away the fun and I would still have to learn how to make it work, so “Let's build!”

And this was my prompt, nothing elaborate, quite the opposite.

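Crea una aplicación web a la que subir un fichero de texto con una tabla en sintaxis MediaWiki haciendo drag'n'drop y la convierta en un fichero json respetando todas las propiedades de la tabla. Finalmente debe ofrecer un enlace para la descarga del fichero json

(In English: “Create a web application where you can upload, via drag'n'drop, a text file containing a table in MediaWiki syntax, and have it converted into a JSON file that preserves all the properties of the table. Finally, it must offer a link to download the JSON file.”)

And I leave you with a screenshot of what the agent did.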

The result was a web application that did exactly that: you dragged in a .txt file containing a table in MediaWiki format, and it gave it back to you as JSON.
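For the curious, the core of such a conversion can be sketched in a few lines. This is not the code the Agent generated (I am not reproducing that here), just a naive illustration under some assumptions: a single flat table, header cells on lines starting with `!`, data cells on lines starting with `|`, and no handling of cell attributes, wiki links or nested tables. The function name `mediawiki_table_to_rows` and the sample rows are made up for the example.

```python
import json


def mediawiki_table_to_rows(text):
    """Convert one simple MediaWiki table into a list of dicts (one per row)."""
    headers, rows, current = [], [], []
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, the table opening "{|" and the caption "|+".
        if not line or line.startswith("{|") or line.startswith("|+"):
            continue
        # "|-" starts a new row and "|}" closes the table: flush pending cells.
        if line.startswith("|-") or line.startswith("|}"):
            if current:
                rows.append(current)
                current = []
            continue
        if line.startswith("!"):
            # Header cells, either one per line or separated by "!!".
            headers.extend(cell.strip() for cell in line[1:].split("!!"))
        elif line.startswith("|"):
            # Data cells, either one per line or separated by "||".
            current.extend(cell.strip() for cell in line[1:].split("||"))
    if current:
        rows.append(current)
    return [dict(zip(headers, row)) for row in rows]


# Placeholder table, not real Wikidex data.
SAMPLE = """{| class="wikitable"
! Number !! Name !! Series
|-
| 001 || Card A || Pikachu
|-
| 002 || Card B || Mewtwo
|}"""

if __name__ == "__main__":
    print(json.dumps(mediawiki_table_to_rows(SAMPLE), indent=2))
```

Mapping each row onto the header names is what makes the JSON pleasant to use afterwards, since every item becomes an object with named fields instead of a bare list of strings.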

Spectacular, although… it's nothing that ChatGPT couldn't get right on the first try. In fact, behind Replit there are models from OpenAI and Anthropic (and probably their own). In other words, in the end the intelligence of the LLM is what it is: we are going a little further, but it is not an AGI.

I won't lie to you: v1 of this converter was not usable. The JSON it produced was quite messy, with items containing fragments of the MediaWiki table styling that was present in the source .txt, so I asked the Agent for an improvement with a much more deliberate request.
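To show where that mess comes from: in MediaWiki syntax a cell can carry attributes before its content, separated by a single pipe, as in `style="..." | value`, and a naive split on `|` leaves those attributes inside the JSON values. I don't know how the Agent's second version actually handled it; the sketch below is just one plausible way to clean a cell, and the name `clean_cell` and the regular expression are my own assumptions.

```python
import re

# One or more name="value" attributes followed by a single "|" (not "||").
ATTR_PREFIX = re.compile(r'^\s*(?:[\w-]+\s*=\s*"[^"]*"\s*)+\|(?!\|)')


def clean_cell(cell):
    """Drop a leading attribute block such as 'style="..." |' from a cell.

    Cells without attributes are returned unchanged (apart from trimming).
    Wiki links like [[Page|Label]] are left alone because they do not start
    with a name="value" pair.
    """
    return ATTR_PREFIX.sub("", cell, count=1).strip()


if __name__ == "__main__":
    print(clean_cell('style="background:#fff" | Pikachu'))
    print(clean_cell('[[Pikachu|Pika]]'))
```

This only covers double-quoted attributes on cells; row attributes after `|-` and unquoted values would need their own handling.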

And that's it: second iteration, and the tool was working 100% for my needs.

One note: this converter is not published, so there are no hosting costs or anything of the sort, only the $0.25 for the Agent's work.